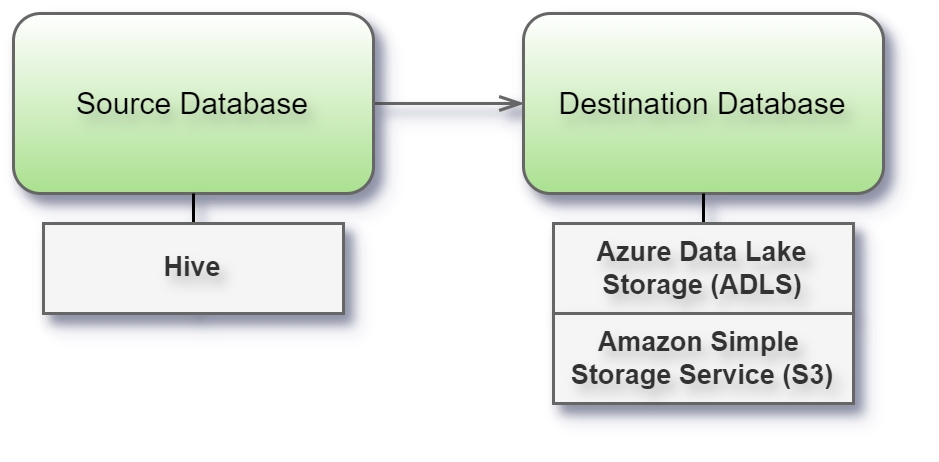

Hive

Before you begin

Before you begin, gather this connection information:

Name of the server that hosts the database you want to connect to, and its port number

User name and password

Whether you are connecting to an SSL server

Connect and set up the workspace

Launch Syntho and select Connect to a database or Create workspace. Under The connection details, click Type to see the complete list of data connections, and select Hive. Then do the following:

Enter the name of the server that hosts the database and the port number to use.

Optionally, enter the schema name.

Enter the user name and password.

Select the Require SSL check box when connecting to an SSL server.

Select Create Workspace.

If Syntho can't make the connection, verify that your credentials are correct. If you still can't connect, your computer may be having trouble locating the server. Contact your network administrator or database administrator.
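Before contacting an administrator, it can help to check whether the server is reachable from your machine at all. The following is a minimal sketch using only the Python standard library; it is not part of Syntho. The function name `can_reach` and the example host name are hypothetical, and port 10000 is assumed only because it is the usual HiveServer2 default, so use the port you gathered earlier.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only tests network reachability. It does not check
    credentials, SSL settings, or whether the service is really Hive.
    """
    try:
        # create_connection resolves the host name and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example call with hypothetical values (replace with your own):
# can_reach("hive.example.internal", 10000)
```

If the function returns False, the problem is likely a host name, port, or firewall issue rather than your credentials.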

Considerations: Handling Hive database partitioning

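In Hive databases, source tables are often partitioned based on three columns treated as index columns. These columns are used for ordering in queries, but their combined values do not always uniquely identify a row, so ordering by them alone can leave rows with identical values in an arbitrary order.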

To address this, use the partitioning columns together with the additional columns specified through the "ORDER BY" dropdown. Combining the partitioning logic with user-defined columns gives a unique and consistent ordering. For more information, see ORDER BY in table settings.
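The idea can be illustrated with a short Python sketch. It is not Syntho's implementation; the helper names `build_order_by` and `is_unique_key` and the column names `year`, `month`, `day`, and `id` are hypothetical. The sketch appends extra columns to the partition columns and checks on sample rows whether the combined key is unique.

```python
from typing import Iterable, Mapping, Sequence


def build_order_by(partition_cols: Sequence[str], extra_cols: Sequence[str]) -> str:
    """Build an ORDER BY clause from partition columns plus extra columns.

    Partition columns come first; duplicates are dropped while keeping order.
    Identifiers are quoted with backticks, as Hive does.
    """
    ordered = []
    seen = set()
    for col in list(partition_cols) + list(extra_cols):
        if col not in seen:
            seen.add(col)
            ordered.append(col)
    if not ordered:
        raise ValueError("at least one column is required")
    return "ORDER BY " + ", ".join(f"`{col}`" for col in ordered)


def is_unique_key(rows: Iterable[Mapping[str, object]], key_cols: Sequence[str]) -> bool:
    """Return True if no two rows share the same values for key_cols."""
    seen = set()
    for row in rows:
        key = tuple(row[col] for col in key_cols)
        if key in seen:
            return False
        seen.add(key)
    return True


# Hypothetical sample: two rows in the same partition.
sample_rows = [
    {"year": 2024, "month": 1, "day": 1, "id": 1},
    {"year": 2024, "month": 1, "day": 1, "id": 2},
]
partition_cols = ["year", "month", "day"]
```

With this sample, `is_unique_key(sample_rows, partition_cols)` is False because both rows fall in the same partition, while adding `id` makes the key unique and `build_order_by(partition_cols, ["id"])` yields a clause that orders the rows consistently.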

Supported data types

BINARY

False

False

False

False
