Databricks


Important

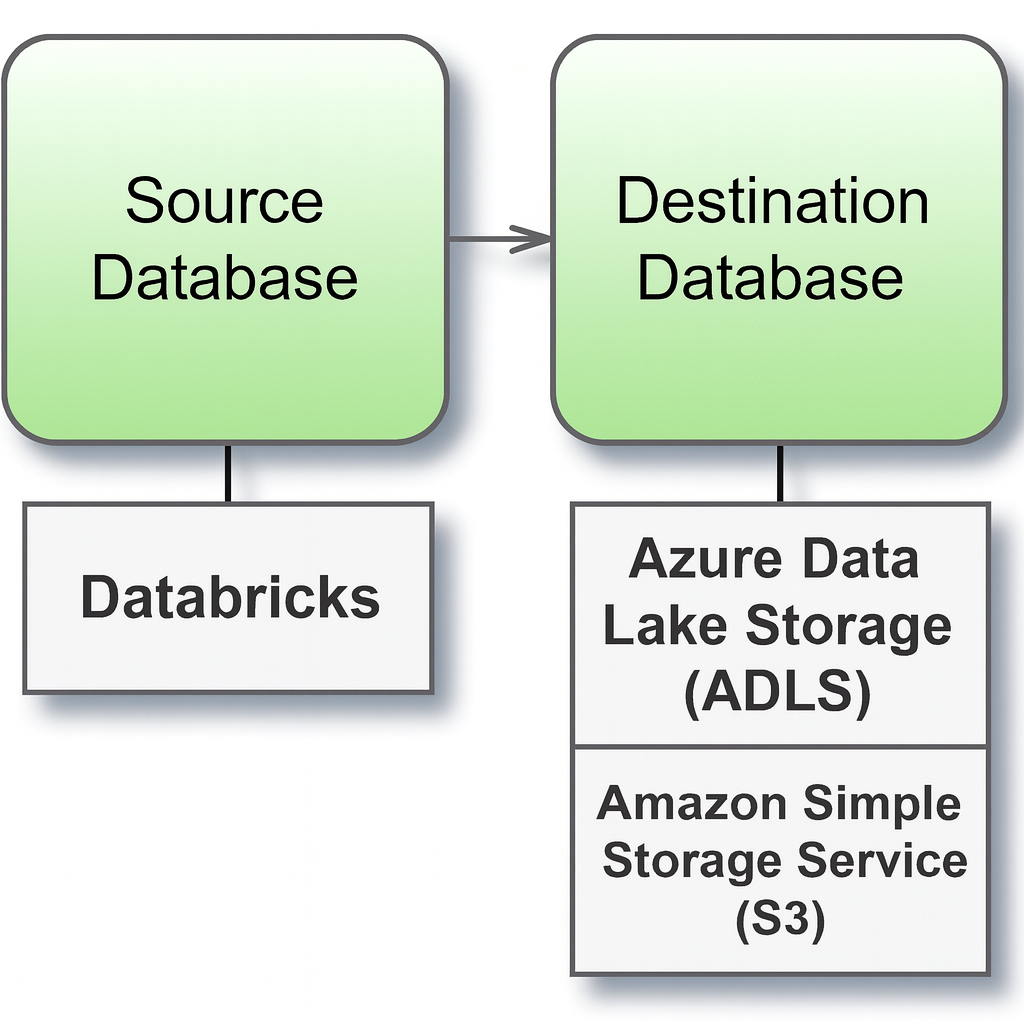

This connector can only be used to connect to a source database. The generated data can be written as Parquet files to the Local Filesystem, Azure Data Lake Storage (ADLS), or Amazon Simple Storage Service (S3).

Before you begin

Before you begin, gather this connection information:

Server hostname and port number of the database you want to connect to

Catalog and database names of the database you want to connect to

HTTP path to the data source

Personal Access Token

In Databricks, find your cluster server hostname and HTTP path using the instructions in Construct the JDBC URL on the Databricks website.
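If you copy the JDBC URL from Databricks, the server hostname, port, and HTTP path can be read directly out of it. The following is a minimal Python sketch, assuming the URL follows the `jdbc:<scheme>://<host>:<port>[/<db>];key=value;...` pattern. `parse_databricks_jdbc_url` is a hypothetical helper, not part of Syntho or Databricks. It deliberately does not return the token, so the token is not accidentally printed or logged.

```python
def parse_databricks_jdbc_url(url: str) -> dict:
    """Extract server hostname, port and HTTP path from a JDBC URL.

    Hypothetical helper; assumes the shape
    jdbc:<scheme>://<host>:<port>[/<db>];key=value;key=value...
    """
    if not url.startswith("jdbc:"):
        raise ValueError("expected a URL starting with 'jdbc:'")
    # Drop the 'jdbc:<scheme>://' prefix.
    _, _, rest = url.partition("://")
    if not rest:
        raise ValueError("missing '://' in JDBC URL")
    # The authority part (host:port[/db]) comes before the first ';'.
    authority, *params = rest.split(";")
    host_port = authority.split("/", 1)[0]
    host, _, port = host_port.partition(":")
    # The remaining segments are key=value properties; keys are compared case-insensitively.
    props = {}
    for item in params:
        if "=" in item:
            key, value = item.split("=", 1)
            props[key.lower()] = value
    # The PWD (token) property is intentionally not returned.
    return {
        "server_hostname": host,
        "port": int(port) if port else 443,
        "http_path": props.get("httppath", ""),
    }
```

For example, the sample values used in the connection fields of this page would be extracted from a URL such as `jdbc:databricks://adb-1111111111111111.0.azuredatabricks.net:443/default;httpPath=sql/protocolv1/o/1234567890123456/0000-111111-demo123;AuthMech=3;UID=token;PWD=...`.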

Connect and set up the workspace

To set up a Databricks workspace, do the following. (To see the complete list of available data connections, open the Type dropdown under The connection details.)

Launch Syntho and select Connect to a database (or Create workspace).

Under The connection details, choose Databricks from the Type dropdown.

Fill in the required fields:

Server hostname → e.g. adb-1111111111111111.0.azuredatabricks.net

Catalog name → e.g. demo_catalog

Database name → e.g. marketing_db

HTTP Path → e.g. sql/protocolv1/o/1234567890123456/0000-111111-demo123

Port number → default is 443

Personal Access Token → see Personal Access Tokens on the Databricks website for information on access tokens.

Click Create Workspace to complete the setup. If Syntho can't make the connection, verify that your credentials are correct. If issues persist, your computer may not be able to locate the server. Contact your network administrator or database administrator for support.
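If the connection fails, it can help to separate network problems from credential problems. The following is a minimal Python sketch that checks, from the machine running Syntho, whether the server hostname resolves and whether a TCP connection to the port (443 by default) can be opened. `check_reachable` is a hypothetical helper. It does not validate the HTTP path or the Personal Access Token, and a proxy or firewall may still block traffic that this check lets through.

```python
import socket
from typing import Tuple


def check_reachable(hostname: str, port: int = 443, timeout: float = 5.0) -> Tuple[bool, str]:
    """Return (ok, message) for DNS resolution plus a TCP connect to hostname:port."""
    try:
        # Step 1: can this machine resolve the server hostname?
        infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        return False, f"DNS lookup failed for {hostname}: {exc}"
    address = infos[0][4][0]
    try:
        # Step 2: can it open a TCP connection to the server on that port?
        with socket.create_connection((hostname, port), timeout=timeout):
            pass
    except OSError as exc:
        return False, f"TCP connection to {hostname}:{port} failed: {exc}"
    return True, f"{hostname}:{port} is reachable ({address})"
```

A DNS failure suggests the computer cannot locate the server, while a successful check combined with a failed Syntho connection points more toward the credentials or HTTP path.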

Supported Databricks versions

The table below provides an overview of the supported Databricks versions and their corresponding Apache Spark versions.

| Databricks version | Apache Spark version |
|---|---|
| 16.2 | 3.5.0 |
| 15.4 LTS | 3.5.0 |
| 14.3 LTS | 3.5.0 |

Note: Version 13 is no longer supported.
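To check a cluster against the supported versions, a small lookup is enough. The following is a minimal Python sketch, assuming you read the runtime version from the cluster configuration (for example a string such as `15.4.x-scala2.12` or `15.4 LTS`). `SUPPORTED_RUNTIMES` mirrors the version table in this section, and `supported_spark_version` is a hypothetical helper.

```python
import re
from typing import Optional

# Supported Databricks versions and their Apache Spark versions, as listed in this section.
SUPPORTED_RUNTIMES = {
    "16.2": "3.5.0",
    "15.4": "3.5.0",  # 15.4 LTS
    "14.3": "3.5.0",  # 14.3 LTS
}


def supported_spark_version(runtime_version: str) -> Optional[str]:
    """Return the Spark version if the runtime is supported, otherwise None.

    Accepts strings such as '15.4', '15.4 LTS' or '15.4.x-scala2.12';
    only the leading major.minor number is compared.
    """
    match = re.match(r"\s*(\d+)\.(\d+)", runtime_version)
    if match is None:
        raise ValueError(f"unrecognized runtime version: {runtime_version!r}")
    return SUPPORTED_RUNTIMES.get(f"{match.group(1)}.{match.group(2)}")
```

Any version missing from the mapping, such as a 13.x runtime, returns None.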

Supported data types

The following table summarizes current support limitations for various data types in the Databricks connector, per generator type.


BINARY | False | True* | True* | True*
ARRAY | False | True* | True* | True*
MAP | False | True* | True* | True*
VARIANT | False | True* | True* | True*
OBJECT | False | True* | True* | True*
ENUM | False | False | False | False

*These data types are not actively supported. However, certain generators (such as AI synthesize, mask, mockers, or calculated columns) may still show True for them, which means those generators can be applied even though the data type itself is not actively supported. Duplication is fully supported for these data types.
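Before you configure generators, you can flag the affected columns in a table schema. The following is a minimal Python sketch, assuming the schema is available as (column name, data type) pairs, for example copied from the `col_name` and `data_type` columns of a `DESCRIBE TABLE` result. `NOT_ACTIVELY_SUPPORTED` lists the types from the table in this section, and `flag_columns` is a hypothetical helper.

```python
from typing import Iterable, List, Tuple

# Data types that the table in this section marks as not actively supported.
NOT_ACTIVELY_SUPPORTED = ("BINARY", "ARRAY", "MAP", "VARIANT", "OBJECT", "ENUM")


def flag_columns(schema: Iterable[Tuple[str, str]]) -> List[str]:
    """Return the names of columns whose base type is not actively supported."""
    flagged = []
    for name, type_str in schema:
        # Reduce e.g. 'array<string>' or 'decimal(10,2)' to the base type name.
        base = type_str.strip().upper().split("<", 1)[0].split("(", 1)[0]
        if base in NOT_ACTIVELY_SUPPORTED:
            flagged.append(name)
    return flagged
```

Flagged columns can still be duplicated. For the other generators, check the table for each type.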

Limitations

Enter database and schema names in lowercase. If a name contains capital letters, you must still enter it in lowercase for the connection to work.

Schema, table, and column names that contain single quotation marks (') or backticks (`) are not supported.
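The limitations above can be checked before you enter names in Syntho. The following is a minimal Python sketch. `identifier_problems` is a hypothetical helper, and `require_lowercase` should be set only for database and schema names, since the lowercase rule applies to those.

```python
from typing import List


def identifier_problems(name: str, require_lowercase: bool = False) -> List[str]:
    """List the reasons a name violates the connector limitations (empty list means OK)."""
    problems = []
    # Schema, table and column names must not contain ' or `.
    if "'" in name or "`" in name:
        problems.append(f"{name!r} contains a single quotation mark or backtick")
    # Database and schema names must be entered in lowercase.
    if require_lowercase and name != name.lower():
        problems.append(f"{name!r} contains capital letters; enter it as {name.lower()!r}")
    return problems
```

For example, `identifier_problems("Marketing_DB", require_lowercase=True)` reports that the name should be entered as `marketing_db`.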
