If your raw data stores a series of events in a single table, separate it into an entity table and a linked table. Follow the steps below to achieve this.

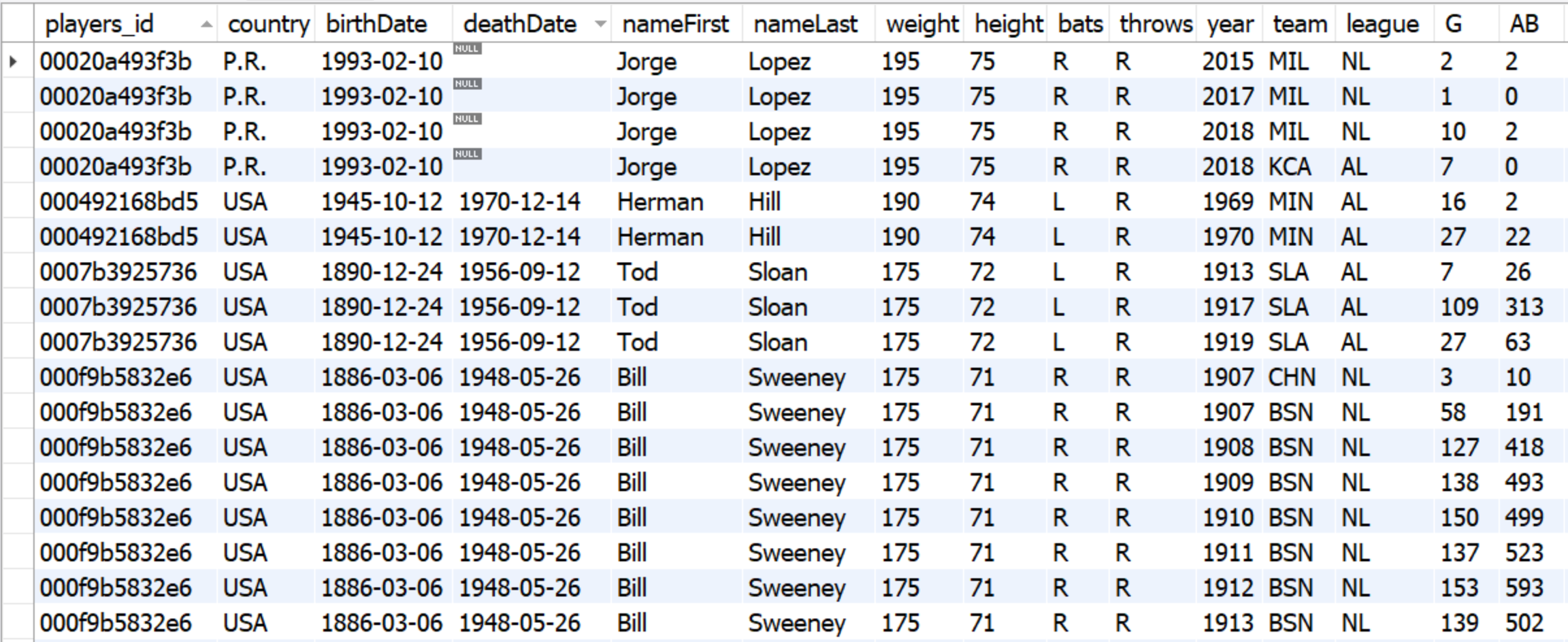

For example, the table below contains a series of events: baseball players' information and their statistics for every season.

Baseball players and their statistics in one table

1. Split single sequential datasets into entity and linked tables

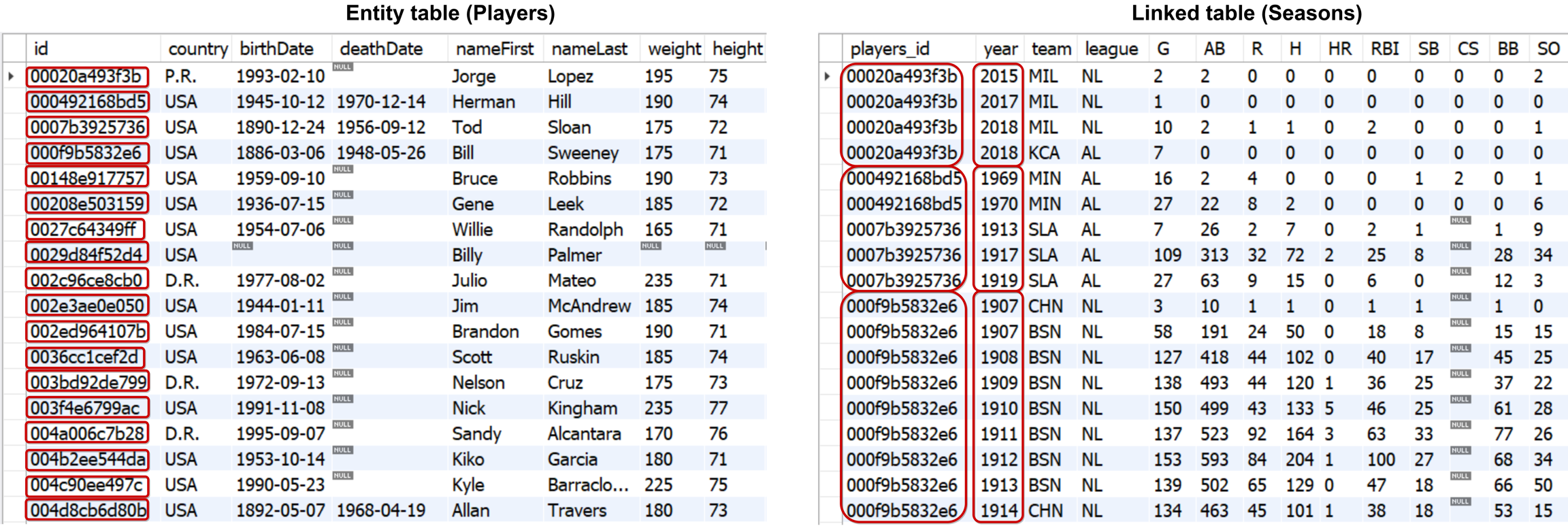

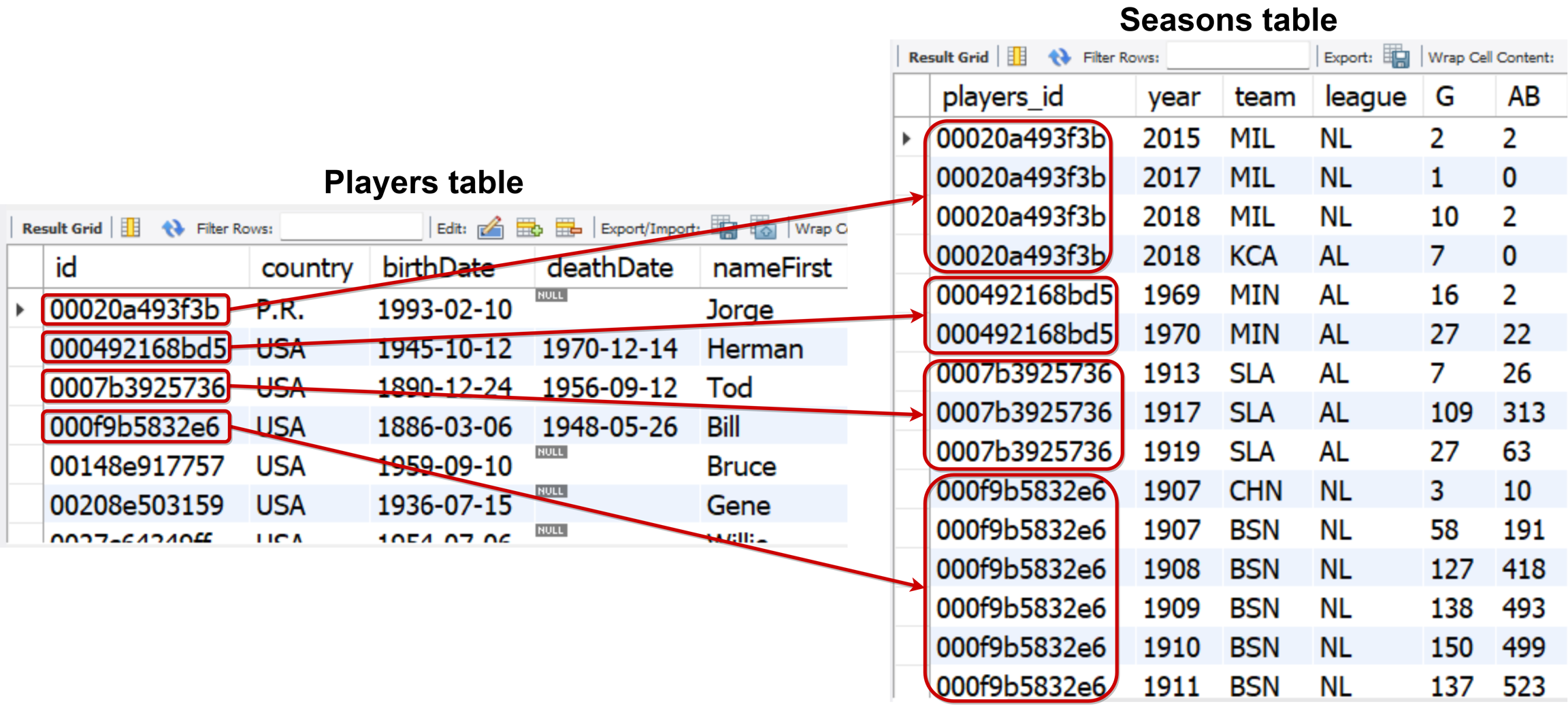

Relocate the event data to another table, ensuring that this new table is connected to the entity table via a foreign key that corresponds to the entity table's primary key. In this setup, each individual or entity listed in the entity table has a corresponding ID in the linked table.
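
The split described above can be sketched in plain Python. This is only an illustration under stated assumptions: the column names (`player_id`, `name`, `birth_year`, `season`, `team`, `hits`), the helper `split_entity_and_linked`, and all row values are hypothetical and not taken from the original dataset.

```python
# Hypothetical flat table: one row per player per season (values are made up).
flat_rows = [
    {"player_id": 1, "name": "Player A", "birth_year": 1990, "season": 2019, "team": "Team X", "hits": 120},
    {"player_id": 1, "name": "Player A", "birth_year": 1990, "season": 2020, "team": "Team Y", "hits": 95},
    {"player_id": 2, "name": "Player B", "birth_year": 1988, "season": 2020, "team": "Team X", "hits": 101},
]

ENTITY_KEY = "player_id"                 # primary key of the entity table
STATIC_COLUMNS = ["name", "birth_year"]  # assumed to describe the player, not the season


def split_entity_and_linked(rows, key, static_columns):
    """Return (entity_rows, linked_rows) built from a flat event table."""
    entities = {}
    linked = []
    for row in rows:
        # Keep the first occurrence of each entity's static attributes.
        entities.setdefault(row[key], {key: row[key], **{c: row[c] for c in static_columns}})
        # Everything else stays in the linked table, including the foreign key.
        linked.append({k: v for k, v in row.items() if k not in static_columns})
    return list(entities.values()), linked


players, seasons = split_entity_and_linked(flat_rows, ENTITY_KEY, STATIC_COLUMNS)
```

Here `players` holds one row per player, and every row in `seasons` keeps `player_id` as the foreign key that points back to it.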

The arrangement of your sequential data is crucial. If your event data is spread across columns, reshape it into rows, so that each row describes a unique event.
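
As a rough sketch of such a reshape, the snippet below turns one column per season into one row per season. The column naming pattern (`hits_<year>`), the helper `wide_to_long`, and the values are assumptions made for illustration.

```python
# Hypothetical wide table: one row per player, one column per season (made-up values).
wide_rows = [
    {"player_id": 1, "hits_2019": 120, "hits_2020": 95},
    {"player_id": 2, "hits_2019": None, "hits_2020": 101},
]


def wide_to_long(rows, key, prefix, value_name):
    """Turn columns named <prefix><year> into one row per (entity, season)."""
    long_rows = []
    for row in rows:
        for column, value in row.items():
            if not column.startswith(prefix) or value is None:
                continue  # skip the key column and seasons without an event
            season = int(column[len(prefix):])
            long_rows.append({key: row[key], "season": season, value_name: value})
    return long_rows


seasons = wide_to_long(wide_rows, key="player_id", prefix="hits_", value_name="hits")
```

Each resulting row describes exactly one event (one player in one season), and missing values no longer produce empty events.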

How to Split Data into Entity and Events

Examples of common datasets designed for a range of applications include:

- Patient journeys, where a table of medical events is linked to individual patients.

- Various types of sensor readings, where an entity table lists sensors and the linked table records the readings associated with those sensors.

- In e-commerce, synthetic data often originates from purchase datasets, where entity tables contain customer information and linked tables store the purchases made by those customers.

These are chronologically ordered, sequential datasets, where the sequence and timing of events provide important insights.

When organizing your datasets for further processing, adhere to these requirements:

| Entity table | Linked table |
| --- | --- |
| Each row represents a unique individual | Multiple rows can correspond to the same individual |
| Must have a unique entity ID (primary key) | Each row should link to a unique ID in the entity table (foreign key) |
| Rows are independent of each other | Multiple rows can be interrelated |
| Contains only static information | Contains only dynamic information; sequences should be time-ordered if possible |
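
Some of these requirements can be checked mechanically before further processing. The sketch below is one possible way to do it, not a prescribed validation routine: the helper `check_tables`, the column names, and the sample rows are all hypothetical.

```python
def check_tables(entity_rows, linked_rows, key, order_column):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []

    # Entity table: the primary key must be unique.
    ids = [row[key] for row in entity_rows]
    if len(ids) != len(set(ids)):
        problems.append("entity table: primary key is not unique")

    # Linked table: every foreign key must exist in the entity table.
    known = set(ids)
    orphans = sorted({row[key] for row in linked_rows if row[key] not in known})
    if orphans:
        problems.append(f"linked table: foreign keys without an entity: {orphans}")

    # Linked table: the events of each entity should appear in time order.
    last_seen = {}
    for row in linked_rows:
        previous = last_seen.get(row[key])
        if previous is not None and row[order_column] < previous:
            problems.append(f"linked table: events for {row[key]} are not time-ordered")
            break
        last_seen[row[key]] = row[order_column]
    return problems


# Made-up example data.
players = [{"player_id": 1, "name": "Player A"}, {"player_id": 2, "name": "Player B"}]
seasons = [
    {"player_id": 1, "season": 2019},
    {"player_id": 1, "season": 2020},
    {"player_id": 2, "season": 2020},
]

problems = check_tables(players, seasons, key="player_id", order_column="season")
bad_problems = check_tables(players, seasons + [{"player_id": 3, "season": 2021}],
                            key="player_id", order_column="season")
```

With the sample data, `problems` is empty, while `bad_problems` reports the season row whose `player_id` has no matching player.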

One table was separated into entity and linked tables, showing static (players) and dynamic (seasons) information, respectively

2. Transfer all static data to the entity table

Inspect your linked table containing events. If it includes static information describing the entity, move this information to the entity table. For instance, consider an e-commerce scenario where each purchase event belongs to a specific customer. The customer's email remains the same across all of that customer's events. It is static and characterizes the customer, not the event. In such a case, the email_address column should be transferred to the entity table.
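
One way to spot such columns is to look for values that never change within an entity. The sketch below assumes hypothetical `customers` and `purchases` tables with made-up values; `find_static_columns` and `move_columns` are illustrative helpers, and the result is a heuristic that still needs a manual review (for example, an entity with a single event makes every column look static for that entity).

```python
from collections import defaultdict

# Made-up example data: the email repeats on every purchase of a customer.
customers = [{"customer_id": 1}, {"customer_id": 2}]
purchases = [
    {"customer_id": 1, "email_address": "a@example.com", "item": "book", "amount": 12.0},
    {"customer_id": 1, "email_address": "a@example.com", "item": "pen", "amount": 2.5},
    {"customer_id": 2, "email_address": "b@example.com", "item": "book", "amount": 12.0},
]


def find_static_columns(linked_rows, key):
    """Columns whose value never changes within any single entity."""
    seen = defaultdict(lambda: defaultdict(set))
    for row in linked_rows:
        for column, value in row.items():
            if column != key:
                seen[column][row[key]].add(value)
    return [c for c, per_entity in seen.items()
            if all(len(values) == 1 for values in per_entity.values())]


def move_columns(entity_rows, linked_rows, key, columns):
    """Copy the given columns to the entity table and drop them from the linked table."""
    first_values = {}
    for row in linked_rows:
        first_values.setdefault(row[key], {c: row[c] for c in columns})
    new_entities = [{**e, **first_values.get(e[key], {})} for e in entity_rows]
    new_linked = [{k: v for k, v in row.items() if k not in columns} for row in linked_rows]
    return new_entities, new_linked


static_columns = find_static_columns(purchases, key="customer_id")
customers, purchases = move_columns(customers, purchases, "customer_id", static_columns)
```

After this step, `email_address` appears once per customer in the entity table, and the purchase rows keep only the event-level columns plus the foreign key.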

Another example is a baseball players table together with a table showing their statistics per season. Here, the players table should be the entity table: it has a primary key (player ID), its rows are independent of each other, each row represents a unique individual, and it contains only static information. The seasons table, on the other hand, is the linked table: it can have several rows for the same individual, since one baseball player can play in more than one season. Each of its rows also links to a unique ID in the entity table (foreign key), and it contains time-ordered dynamic information. See the illustration below.

Illustration showing one-to-many relationship of players and seasons tables