Introduction to data generators

The Syntho platform offers various data generators for different scenarios. They take into account the nature of the data, privacy concerns, and specific use cases, so users can select the most appropriate option. The summary table below gives an overview of these methods, their relevance, and their use-case scenarios. Each data generator is covered in detail in its own user guide section.

The features below are key to the smart de-identification and rule-based synthetic data methods.

Training a generative AI model on the original data to generate new rows that mimic, but have no 1-to-1 relation with original rows.

To generate synthetic feature dataset for ML model development

When statistical accuracy and maximum privacy are needed

To expand dataset rows while maintaining original statistical properties

When working with multiple related tables

When data consistency across systems is required

When you need to be able to revert to original records

If entirely new, unseen text values must be generated

Generating entirely new, user-defined values

For custom data generation without regard to preserving original column value relationships

When you need to maintain relationships with original data

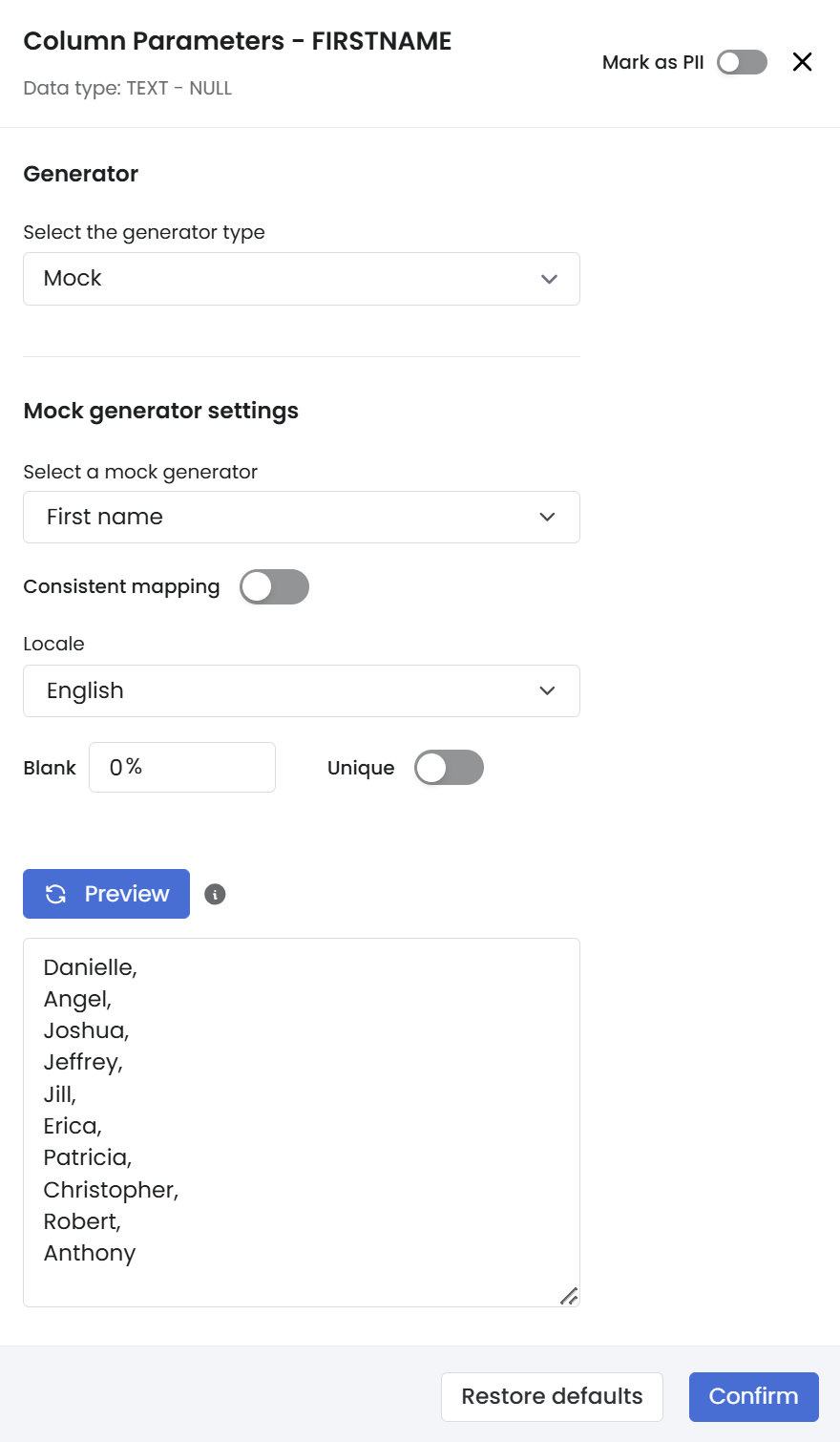

To generate mock values that are consistently mapped from original values (e.g. Hank always becomes Jeffrey)

To ensure data consistency across tables, systems and data generation jobs

If fully random data, without consistency is desired

Anonymizes data by modifying values directly while preserving the format

When data needs to remain recognizable in format. For anonymizing PII fields in non-production environments

When preserving exact relationships or values is required.

Generating user-defined values based on custom logic

For complex data manipulations requiring specific business logic

For simple data generation tasks that don't need custom logic

Generates unique keys to ensure referential integrity across related tables.

When working with multiple tables needing unique keys for foreign-key relationships.

If relationships or foreign keys are not required.

Automatic discovery of most sensitive (i.e. PII/PHI) columns in you database

To discover most sensitive columns (i.e. PII / PHI)

When your data is not sensitive

Comparison of data generated with different generators

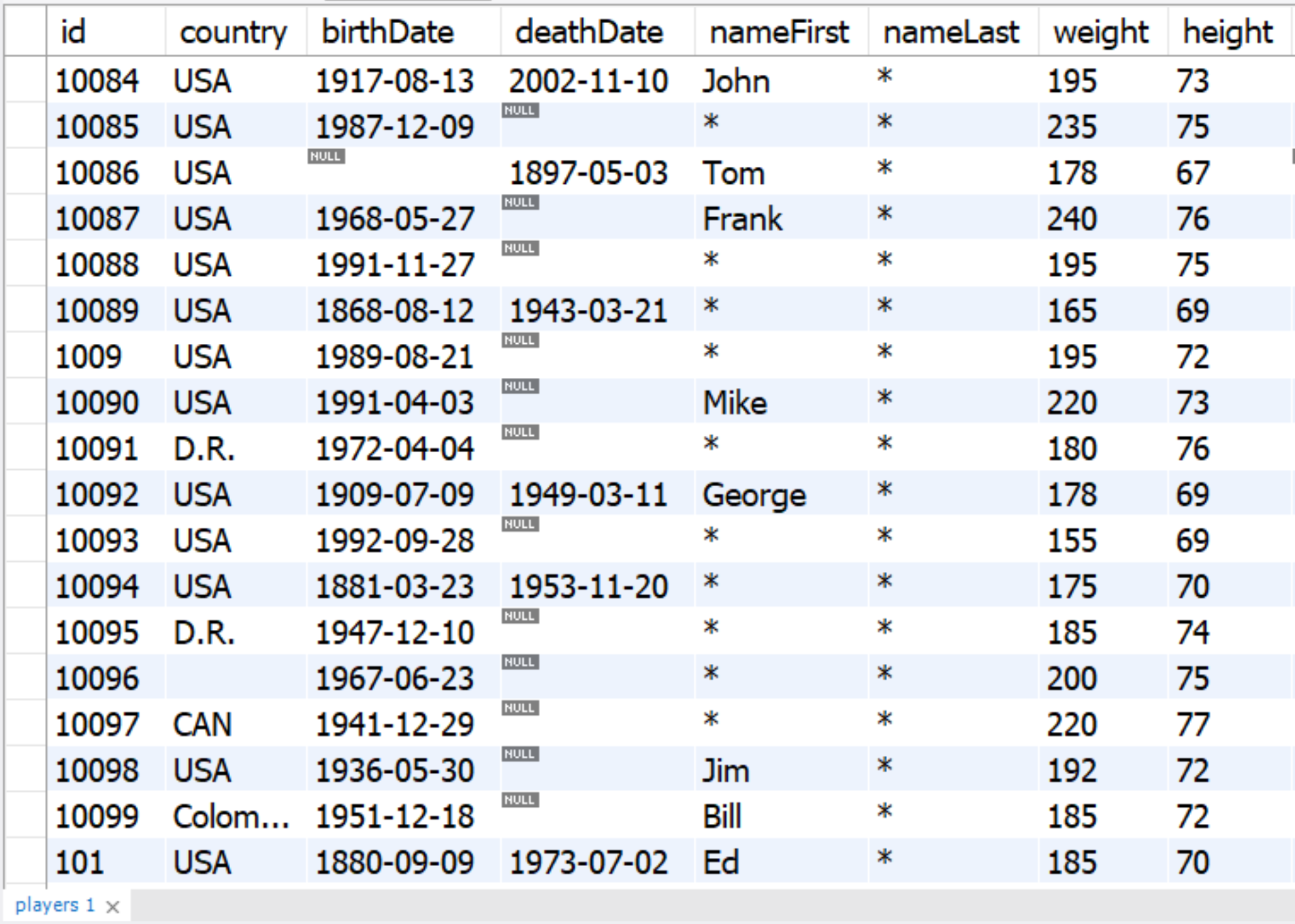

We demonstrate each generator on a real baseball dataset that includes a players table and a seasons table.

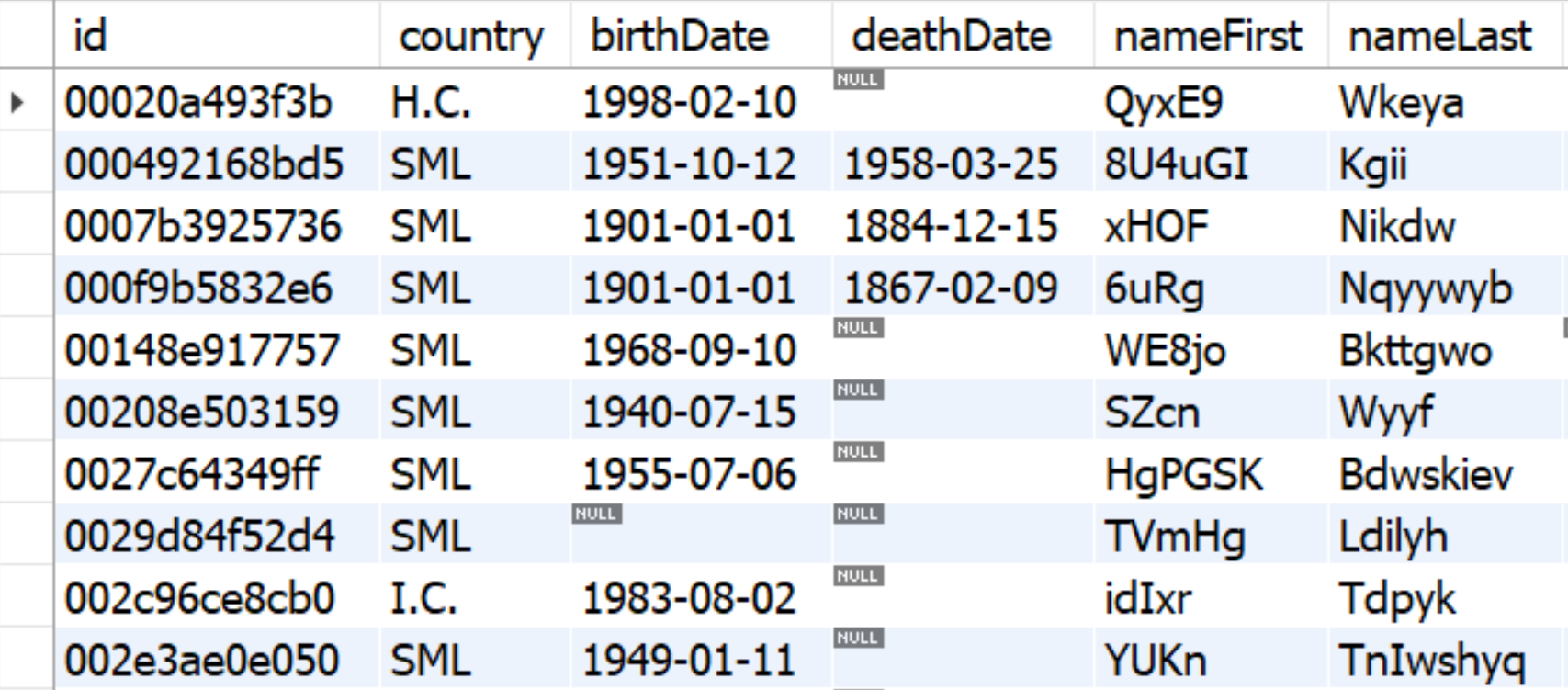

AI-generated synthetic data is applied to the players table

In the first example, the generative AI model produced an entirely new synthetic dataset based on the original dataset. The synthetic dataset preserves the statistics of the original dataset, but there is no 1-to-1 correspondence between synthetic records and original records. Note that a rare category replacement value of 10 was applied for AI-generated synthetic data. This means that any name appearing fewer than 10 times in the nameFirst and nameLast columns was replaced with an asterisk (*) to protect privacy.
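The rare category rule can be illustrated with a minimal sketch. It only mirrors the rule as described (values occurring fewer than the threshold number of times become `*`). It is not Syntho's implementation, and the function name `replace_rare_categories` and the sample names are hypothetical.

```python
from collections import Counter


def replace_rare_categories(values, threshold=10, replacement="*"):
    """Replace every value that occurs fewer than `threshold` times in the column."""
    counts = Counter(values)
    return [v if counts[v] >= threshold else replacement for v in values]


# Hypothetical column: "John" occurs 12 times, "Zeke" only once.
names = ["John"] * 12 + ["Zeke"]
protected = replace_rare_categories(names, threshold=10)
print(protected.count("John"), protected.count("*"))  # 12 1
```

A value that occurs exactly `threshold` times is kept. Only values below the threshold are replaced, which matches the "fewer than 10 times" wording above.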

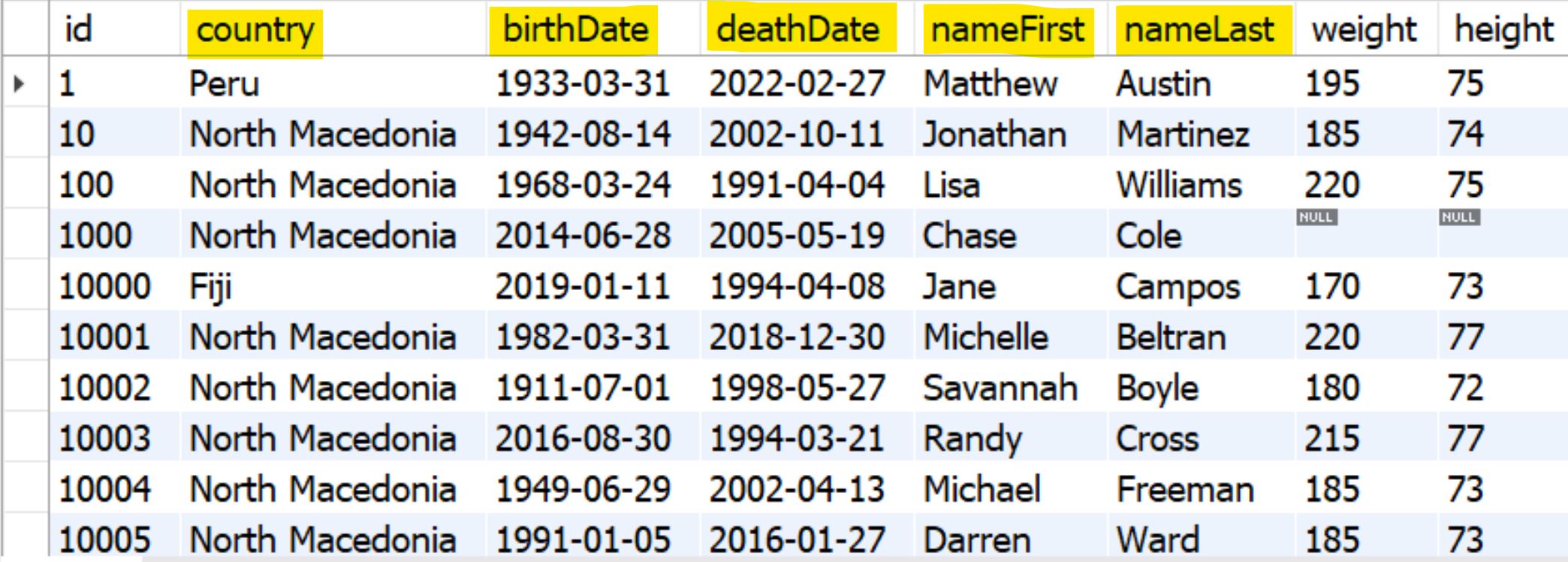

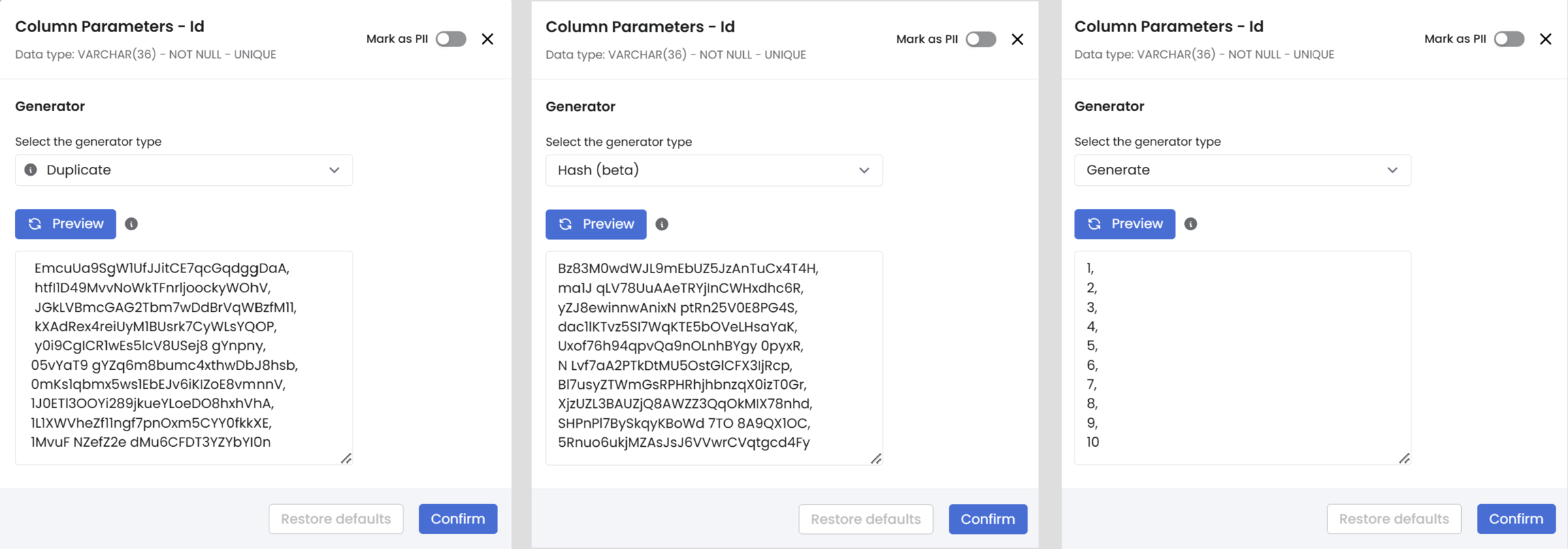

Mockers are applied to the players table

Mockers are applied to specific columns in the players table, which are highlighted in yellow in the table above: 'country', 'birthDate', 'deathDate', 'nameFirst', and 'nameLast'.

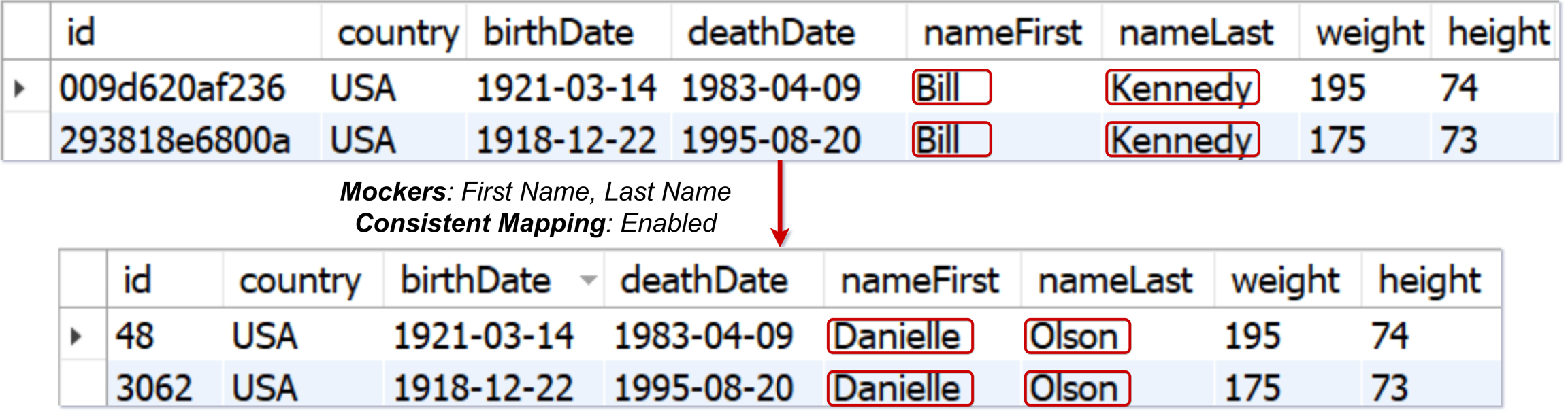

Consistent Mapping with Mockers is applied to the players table

If you enable consistent mapping, each original value is always replaced by the same mock value across tables. In this example, we enabled consistent mapping for two columns, "nameFirst" and "nameLast", so that each original first name and surname always receives the same synthetic (mock) replacement. The MySQL tables below illustrate this: mockers with consistent mapping map the name "Bill Kennedy" to "Danielle Olson".

Note that other first names can also be mapped to "Danielle", and other surnames to "Olson". However, whenever Syntho detects "Bill", it always replaces it with the mock first name "Danielle". The same applies to "Kennedy" and "Olson" in the last-name column. You can verify this consistency using the other columns: they are copied unchanged from source to destination, so original and synthetic rows can be matched on them.

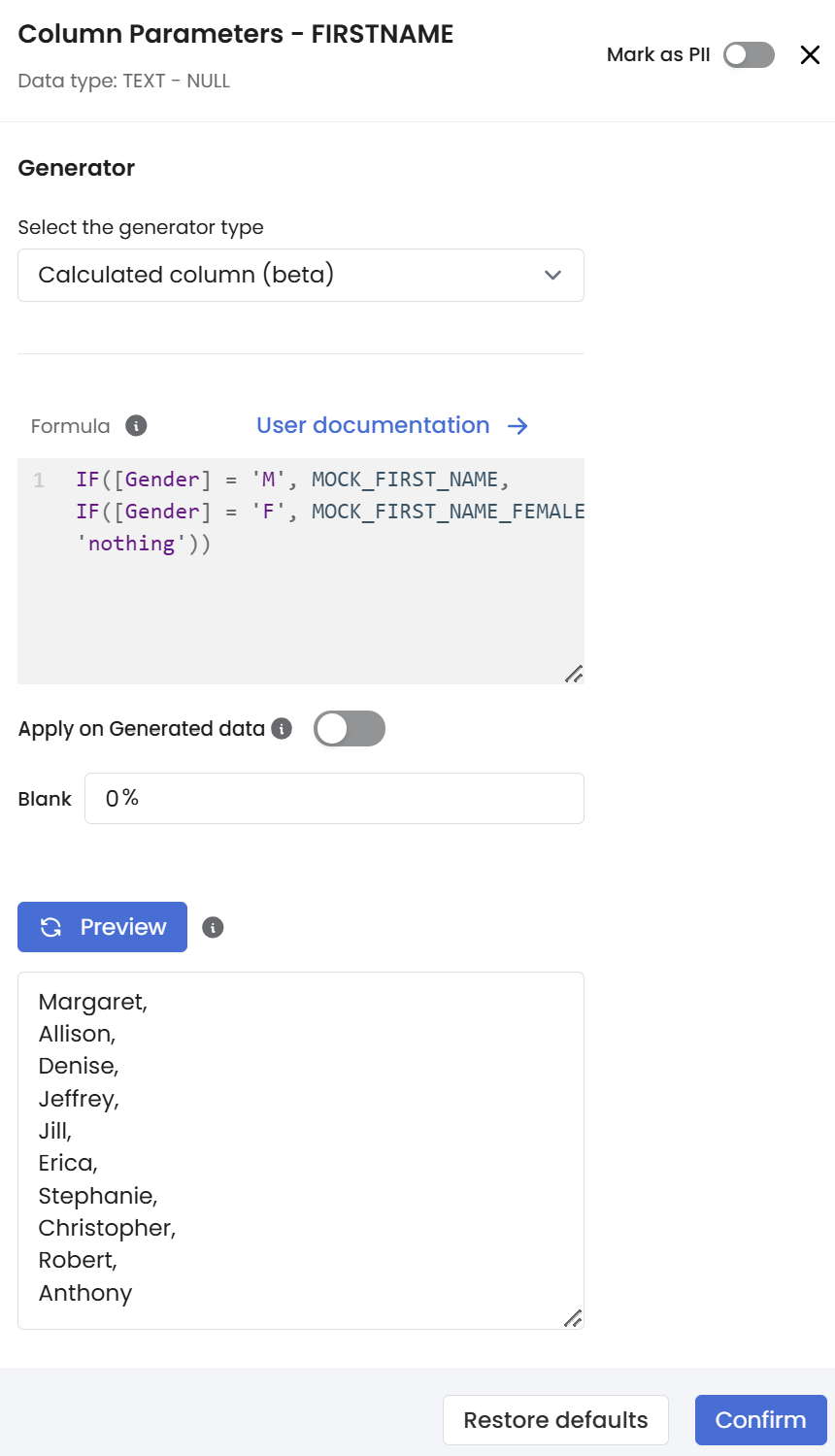
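The difference between plain mockers and mockers with consistent mapping can be sketched as follows. The guide does not describe how Syntho derives a consistent mapping. This sketch assumes one common technique: a keyed hash (HMAC-SHA256) of the original value selects an entry from a pool of mock names. `FIRST_NAMES`, the secret, and both function names are hypothetical.

```python
import hashlib
import hmac
import random

# Hypothetical pool of mock first names; a real mocker draws from a much larger list.
FIRST_NAMES = ["Danielle", "Jeffrey", "Maria", "Tom", "Aisha", "Lars"]


def random_mock(_original, rng):
    """Plain mocker: returns a random name on every call, ignoring the original value."""
    return rng.choice(FIRST_NAMES)


def consistent_mock(original, secret, pool=FIRST_NAMES):
    """Consistent mapping: a keyed hash of the original value picks the mock value,
    so equal inputs always produce equal outputs (for the same secret and pool)."""
    digest = hmac.new(secret, original.encode("utf-8"), hashlib.sha256).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]


secret = b"hypothetical-project-secret"
rng = random.Random()
print(consistent_mock("Bill", secret) == consistent_mock("Bill", secret))  # True
print(random_mock("Bill", rng), random_mock("Bill", rng))  # may differ between calls
```

The pool of mock values is finite, so several different original names can land on the same mock name. The mapping is consistent in one direction only ("Bill" always gives the same output), which matches the behavior described above.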

Calculated Columns are applied to the players table

Calculated columns let users perform a broad spectrum of operations on data, from simple arithmetic to complex logical and statistical computations. In the illustration below, the following operation is applied:

Mask is applied to the players table

The Mask generator modifies values in place, without creating new records or altering the structure of the dataset. Data therefore stays recognizable in format while being anonymized, which is particularly useful for fields that contain identifying attributes. In this example, the Mask generator anonymizes the following columns of the players table, each with its own masking function: country (Random Character Swap), birthDate (Datetime Noise), deathDate (Hasher), nameFirst (Format Preserving Encryption), and nameLast (Random Character Swap). These columns contain information that could potentially identify individuals. When consistent mapping is enabled in the Mask settings, identical input values always map to the same masked output value.
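Two of the masking functions named above can be illustrated with a minimal sketch. It assumes that "Random Character Swap" replaces each letter with a random letter of the same case and each digit with a random digit, and that "Datetime Noise" shifts a date by a bounded random number of days. These are interpretations of the function names, not Syntho's documented behavior. Consistent mapping is modelled by seeding the random generator from the input value. All function names are hypothetical. Hasher and Format Preserving Encryption are not sketched here, because FPE needs a dedicated cipher such as NIST FF1.

```python
import hashlib
import random
import string
from datetime import datetime, timedelta


def _rng_for(value, consistent):
    """With consistency on, seed the RNG from the input so equal inputs mask identically."""
    if consistent:
        seed = int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")
        return random.Random(seed)
    return random.Random()


def random_character_swap(value, consistent=False):
    """Swap each letter or digit for a random one of the same kind; keep everything else."""
    rng = _rng_for(value, consistent)
    out = []
    for ch in value:
        if ch.isupper():
            out.append(rng.choice(string.ascii_uppercase))
        elif ch.islower():
            out.append(rng.choice(string.ascii_lowercase))
        elif ch.isdigit():
            out.append(rng.choice(string.digits))
        else:
            out.append(ch)  # separators such as spaces, dashes and dots are preserved
    return "".join(out)


def datetime_noise(value, max_days=30, consistent=False):
    """Shift a datetime by a random whole number of days in [-max_days, max_days]."""
    rng = _rng_for(value.isoformat(), consistent)
    return value + timedelta(days=rng.randint(-max_days, max_days))


print(random_character_swap("USA", consistent=True))  # three random uppercase letters
print(datetime_noise(datetime(1934, 2, 5), max_days=30))
```

Because the consistent mode here is seeded from an unkeyed hash, anyone who knows the scheme could reproduce the masked values. A production version would mix a secret key into the seed.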

Hash is applied to the players table

In this example, the Hash generator is applied to the key column of the players table, id, to produce anonymized identifiers while preserving referential integrity. The id values are hashed with a consistent algorithm, so the same original id always yields the same hashed value and related records can still be linked across tables. With unique hashing enabled, each output value is guaranteed to be unique.

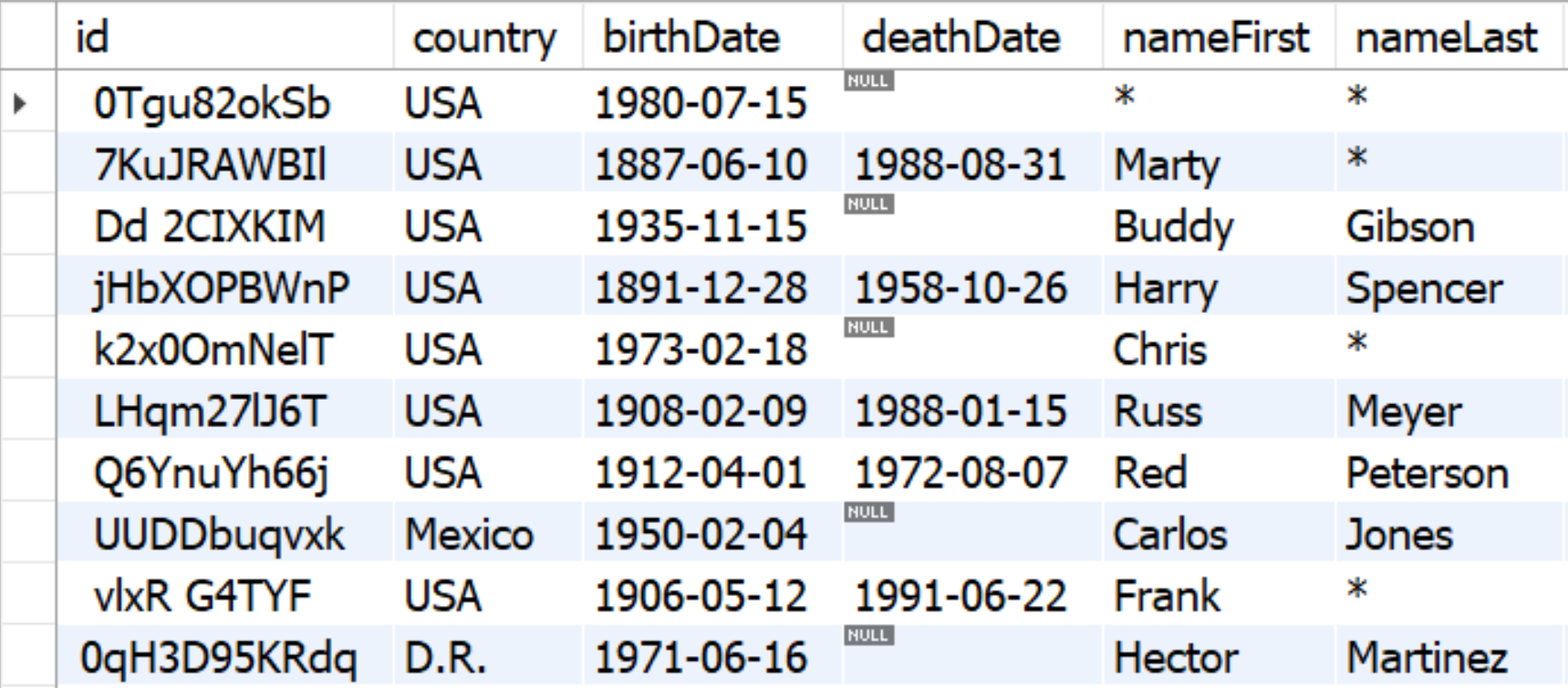
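Consistent, unique hashing of a key column can be sketched as follows. The sketch assumes a keyed SHA-256 hash (HMAC) truncated to a fixed length, with an explicit collision check standing in for "unique hashing". Syntho's actual algorithm is not specified in this guide. The secret, the sample id values, and the seasons foreign-key column are hypothetical.

```python
import hashlib
import hmac


def hash_key(value, secret, length=16):
    """Keyed SHA-256 hash, truncated to `length` hex characters."""
    digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def hash_column(values, secret, length=16):
    """Hash a key column and fail loudly if two distinct ids collide."""
    mapping = {v: hash_key(v, secret, length) for v in set(values)}
    if len(set(mapping.values())) != len(mapping):
        raise ValueError("hash collision: increase `length`")
    return [mapping[v] for v in values]


secret = b"hypothetical-secret"  # must be kept out of the generated data
player_ids = ["p001", "p002", "p003"]         # players.id (hypothetical values)
season_player_ids = ["p002", "p002", "p003"]  # hypothetical foreign-key column in seasons

hashed_players = hash_column(player_ids, secret)
hashed_seasons = hash_column(season_player_ids, secret)
# Every hashed foreign key still matches a hashed primary key, so joins keep working.
print(set(hashed_seasons) <= set(hashed_players))  # True
```

Using a keyed hash rather than a plain one means the ids cannot be recovered by hashing guessed candidates without the secret.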

Key generators are applied to the players table

In this example, key generators are used to create unique or duplicate keys in the players table, ensuring referential integrity across related tables. The following options are used:

Duplicate copies the original key values exactly as they appear in the source data, preserving the relationships between primary and foreign keys. This ensures the key structure remains intact.

Hash converts original key values into hashed representations while preserving relationships across tables. The hashed values are obfuscated and irreversible, so the keys are anonymized while relational integrity is maintained.

Generate creates new, synthetic key values that do not correspond to the original keys. It produces entirely new keys and does not preserve the order or relationships of the source data.
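The three key generator options can be contrasted in a minimal sketch. It assumes simple stand-ins for each option: an unchanged copy for Duplicate, an HMAC-SHA256 digest for Hash, and sequential integers for Generate. These are illustrations rather than Syntho's implementation, and all names and values are hypothetical.

```python
import hashlib
import hmac


def duplicate_keys(keys):
    """Duplicate: copy the source key values unchanged."""
    return list(keys)


def hash_keys(keys, secret):
    """Hash: keyed, deterministic digest, so a key hashes identically in every table."""
    return [hmac.new(secret, str(k).encode("utf-8"), hashlib.sha256).hexdigest() for k in keys]


def generate_keys(n, start=1):
    """Generate: brand-new sequential keys with no link to the source values."""
    return list(range(start, start + n))


player_ids = ["p1", "p2", "p3"]         # primary key of players (hypothetical values)
season_player_ids = ["p2", "p2", "p3"]  # foreign key in seasons (hypothetical values)
secret = b"hypothetical-secret"

for name, pk, fk in [
    ("Duplicate", duplicate_keys(player_ids), duplicate_keys(season_player_ids)),
    ("Hash", hash_keys(player_ids, secret), hash_keys(season_player_ids, secret)),
]:
    print(name, set(fk) <= set(pk))  # True for both: every foreign key still has a match
print(generate_keys(len(player_ids)))  # [1, 2, 3]
```

Duplicate and Hash both keep every foreign key pointing at an existing primary key. As described above, Generate produces keys that carry no relationship to the source values.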
